The world of generative AI video has felt like a two-horse race for months. You had the industry darling, Runway, and the quiet, enigmatic powerhouse, OpenAI’s Sora, looming over everyone. We’ve seen incredible clips, debated their potential, and waited for widespread access. But while all eyes were on Silicon Valley, a new contender was quietly training in the background. Now, it’s here, and its doors are opening.

Meet Kling, the AI video model from Kuaishou Technology, the Chinese tech giant behind the TikTok rival Kwai. It didn’t just enter the ring; it burst through the ropes, showcasing capabilities that directly challenge, and in some cases surpass, what we’ve seen from Sora.

Kling can generate up to two minutes of 1080p video at 30 frames per second from a simple text prompt. Let that sink in. Two full minutes. That’s double the current demonstrated length of Sora and a massive leap from the short clips we’ve grown accustomed to.

But is length the only thing that matters? In this in-depth review, we'll break down what makes Kling tick, how it stacks up against the competition, and what it means for creators and businesses now that it's becoming more accessible.

At its core, Kling is a text-to-video diffusion model, but it’s the engine under the hood that’s so impressive. It’s built on a 3D Spatiotemporal Transformer architecture, a fancy term that essentially means it understands not just where objects sit in a frame (the spatial part) but also how they should realistically move and interact over time (the temporal part).

This is Kling’s secret sauce. While other models create beautiful, cinematic shots, they sometimes struggle with the basic laws of physics. An object might slide unnaturally, or a person’s movement might feel just a little bit 'off.' Kuaishou’s team focused heavily on this, leveraging a sophisticated variation of the same architecture that powers Sora to simulate complex motion with startling accuracy.

The result? Videos where animated elements don't just appear on screen, but interact with their environment and with each other in a way that feels fundamentally grounded in reality. Objects possess a convincing sense of weight and momentum, and actions unfold with a natural flow, greatly enhancing the overall believability of the generated scenes.

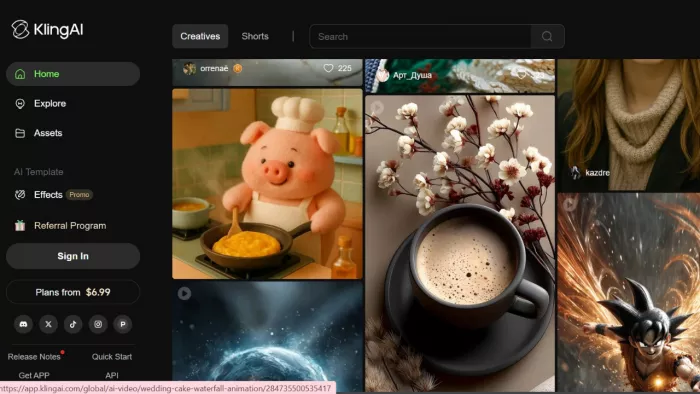

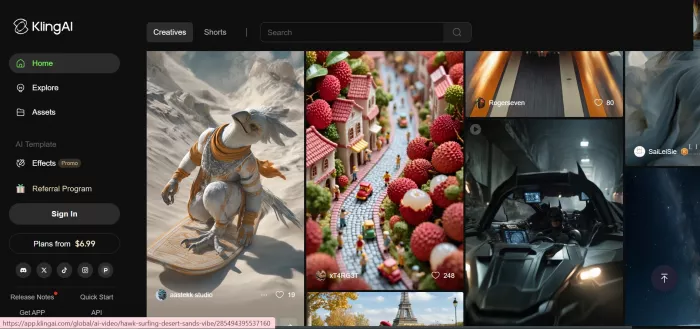

Crucially, after an initial waitlist period, Kling is now openly available within Kuaishou's video editing app, Kwaiying (快影). This move has allowed a much wider audience, particularly in its primary market, to begin experimenting with the tool, giving us a much clearer picture of its real-world performance beyond curated demos.

Kling isn’t just a one-trick pony. It brings a suite of powerful features that position it as a top-tier generative video tool.

So, how does Kling really stack up against the big names like Sora, Runway, and Pika Labs? Let’s break it down with the latest access info.

| Feature | Kling AI | OpenAI's Sora | Runway Gen-2 | Pika Labs |
| --- | --- | --- | --- | --- |
| Max Video Length | Up to 2 minutes | Up to 1 minute | Up to 16 seconds | Up to 3 seconds (extendable) |
| Max Resolution | 1080p | 1080p | 1080p equivalent | 1080p equivalent |
| Framerate | 30 fps | Variable (up to 60 fps) | 24 fps | 24 fps |
| Core Strength | Physics simulation, long duration | Scene consistency, realism | Creative tools, cinematic styles | Character consistency, lip-sync |
| Accessibility | Openly available (via Kwaiying app) | Closed access (red teamers only) | Publicly available (paid tiers) | Publicly available (paid tiers) |
| Developer | Kuaishou | OpenAI | RunwayML | Pika Labs |

As you can see, Kling immediately becomes a leader in video length and matches Sora in resolution. Its focus on physics offers a different flavor of realism compared to Sora's cinematic coherence. Most importantly, its open availability within the Kwaiying app gives it a massive practical advantage over the still-inaccessible Sora.
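To put the length gap in concrete terms, here is a quick back-of-the-envelope sketch that turns the table's duration and framerate figures into total frames per clip. The numbers come straight from the table; the variable names are just illustrative, Sora is left out because its framerate is listed as variable, and Pika's figure covers only the base 3-second clip before any extension.

```python
# Durations (seconds) and frame rates (fps) copied from the comparison table.
# Sora is omitted because its frame rate is listed as variable.
specs = {
    "Kling AI": (120, 30),
    "Runway Gen-2": (16, 24),
    "Pika Labs": (3, 24),  # base clip only, before extension
}

# Total frames the model has to keep coherent in one maximum-length clip.
frames = {name: seconds * fps for name, (seconds, fps) in specs.items()}

for name, count in frames.items():
    print(f"{name}: {count} frames")
```

By this rough measure, a maximum-length Kling clip means keeping 3,600 frames coherent, against 384 for Runway Gen-2 and 72 for a base Pika clip.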

Now that Kling is accessible, users are actively experimenting with its limits. A powerful AI model is only as good as the instructions you give it. A great video prompt is about layering details. You need to be a director, cinematographer, and set designer all at once.

Here's a breakdown of the anatomy of a powerful Kling prompt:

Subject: Who or what is the focus? Be specific. "A middle-aged man with glasses and a red jacket."

Action: What is the subject doing? The more descriptive, the better. "Voraciously eating a bowl of hot, steaming ramen noodles with chopsticks."

Setting: Where is the action taking place? "In a bustling, neon-lit Tokyo market at night."

Style & Mood: What is the visual and emotional tone? Use words like "cinematic," "hyperrealistic," "8K," "dramatic lighting," or "shot on film."

Camera Shot: How should the scene be framed? Use terms like "wide-angle shot," "close-up," "drone footage," or "handheld tracking shot."
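If you write a lot of prompts, it can help to treat those five components as a reusable template. Below is a minimal sketch of that idea in plain Python. The `VideoPrompt` class and its `render` method are hypothetical helpers made up for this illustration, not part of any Kling or Kwaiying API; the output is simply a text string you would paste into the app.

```python
from dataclasses import dataclass


@dataclass
class VideoPrompt:
    """Hypothetical container for the five prompt components described above."""
    subject: str
    action: str
    setting: str
    style: str
    camera: str

    def render(self) -> str:
        # Lead with style and camera framing, then say who does what, and where.
        return f"A {self.style} {self.camera} of {self.subject} {self.action} {self.setting}."


prompt = VideoPrompt(
    subject="a middle-aged man with glasses and a red jacket",
    action="voraciously eating a bowl of hot, steaming ramen noodles with chopsticks",
    setting="in a bustling, neon-lit Tokyo market at night",
    style="cinematic, hyperrealistic",
    camera="close-up",
)
print(prompt.render())
```

Swapping a single field (say, `camera="drone footage"`) makes it easy to test how one component changes the result while everything else stays fixed.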

A Prompt You Can Use (and Adapt)

Let's build a prompt based on this structure to create something complex and dynamic.

Prompt Example: A hyperrealistic, cinematic wide-angle shot of an astronaut planting a shimmering, holographic flag on the surface of Mars. The background shows the vast, red desert landscape with two moons visible in the dark purple sky. The astronaut's movements are slow and deliberate due to the low gravity. Dust kicks up from their boots with each step. Shot on 70mm film, with dramatic side lighting from the setting sun.

This level of detail gives the model everything it needs to understand the physics (low gravity), the lighting, the composition, and the overall aesthetic.

With the removal of the waitlist, reactions are no longer based on curated demos but on real-world user creations. The sentiment remains overwhelmingly positive.

Praise for Realism: Users consistently praise Kling’s grasp of physics. The way a backpack realistically sags on a boy’s shoulders or the way fire crackles and casts flickering light holds up even in user-generated content.

Excitement Over Length: For creators, the two-minute runtime is a proven game-changer. It has moved AI video from a "cool trick" into the realm of actual short-form storytelling.

Identifying Weaknesses: With wider use comes a better understanding of limitations. Users have found that, like all current models, Kling can sometimes struggle with maintaining perfect character consistency over very long shots or with complex hand movements. However, its overall performance is still considered state-of-the-art.

Calling Kling a "Sora-killer" might be premature, but it's undoubtedly the first true rival to step into the ring with comparable specs and one massive advantage: you can actually use it. Kuaishou has not just matched the hype; it has delivered a powerful, accessible tool that raises the bar on video length and physics simulation.

For businesses and tech professionals, Kling represents a monumental shift. It signals that the generative video space is not a monopoly. Fierce competition will accelerate innovation, drive down costs, and push capabilities forward at a blistering pace.

With its accessibility growing, the era of generative video is arriving faster than anyone predicted. Tools like Kling are moving beyond novelty and are becoming indispensable for marketing, entertainment, and education. The time to start learning the language of video prompting is now. Kling isn't just another AI model; it's a statement. It proves that groundbreaking innovation can come from anywhere and that the race to create the future of video is wide open.
