When I first started using Civitai, I did not approach it as an “AI art tool.” I came to it out of necessity. I was already using Stable Diffusion locally, experimenting with different styles, and kept running into the same problem: models behaved inconsistently, documentation was scattered, and it was difficult to understand why certain outputs worked while others didn’t.

Civitai entered my workflow less as a destination and more as a reference layer, a place to observe how other people were training, combining, and prompting models in real conditions.

Over time, it became something closer to a daily research environment than a casual website.

My initial reaction to Civitai was conflicted, bordering on resistant.

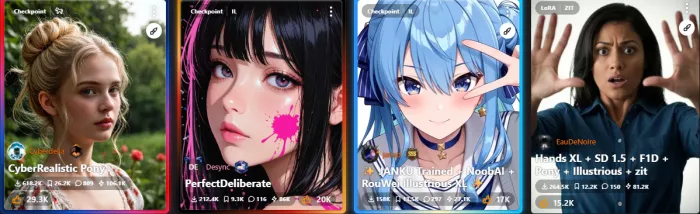

The scale was immediately apparent. Pages were packed with thousands of models, countless tags, and dense parameter panels. At the same time, there was a noticeable absence of guidance. No walkthrough, no “start here” path, no attempt to soften the learning curve. The interface assumes prior knowledge, and that assumption is non-negotiable.

Concepts like checkpoints, LoRAs, samplers, CFG scales, and base model compatibility are treated as baseline literacy. If you don’t understand them, the platform does not pause to explain or contextualize. That can feel exclusionary at first, especially when compared to more polished, consumer-oriented AI tools.

Initially, this felt like poor UX. With more time, it became clear that it is a deliberate design choice. The platform prioritizes exposure over abstraction. Instead of hiding complexity behind defaults, it presents the system as it is, trusting users to either learn or leave. That approach creates friction early on, but it also avoids the false sense of mastery that simplified tools often create.

1. Model Research (The Core Value)

Over time, model research became the primary reason I returned to Civitai.

Rather than downloading models impulsively, I began treating each model page as a case study. I would examine:

I also relied heavily on example images, not for aesthetics alone, but to analyze how prompts were structured in practice. Seeing how different users invoked the same model—sometimes successfully, sometimes not—revealed limitations that were never mentioned in the description.

Crucially, I cross-checked reproducibility. If multiple users produced similar results with comparable settings, that signaled a reliable model. If outputs varied wildly, it usually meant the model was sensitive, unstable, or highly context-dependent.
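That cross-check can be written down as a small heuristic. The Python sketch below is only an illustration built on my own assumptions, not anything Civitai provides. Each example image is reduced to a hypothetical `ExampleImage` record (sampler, CFG scale, steps). "Comparable settings" means the same sampler, with CFG and steps within a tolerance. `similarity` is a caller-supplied function that returns a 0 to 1 score for two images, and any perceptual similarity metric could fill that role. The tolerances, the 0.7 threshold, and the minimum pair count are arbitrary placeholders.

```python
from collections.abc import Callable
from dataclasses import dataclass
from itertools import combinations
from statistics import mean


@dataclass(frozen=True)
class ExampleImage:
    """Hypothetical record of one example image's visible settings."""
    image_id: str
    sampler: str
    cfg_scale: float
    steps: int


def comparable(a: ExampleImage, b: ExampleImage,
               cfg_tol: float = 1.0, steps_tol: int = 10) -> bool:
    """Same sampler, with CFG scale and step count within the given tolerances."""
    return (a.sampler == b.sampler
            and abs(a.cfg_scale - b.cfg_scale) <= cfg_tol
            and abs(a.steps - b.steps) <= steps_tol)


def assess_model(examples: list[ExampleImage],
                 similarity: Callable[[str, str], float],
                 threshold: float = 0.7,
                 min_pairs: int = 3) -> str:
    """Average output similarity over pairs of examples with comparable settings."""
    scores = [similarity(a.image_id, b.image_id)
              for a, b in combinations(examples, 2)
              if comparable(a, b)]
    if len(scores) < min_pairs:
        return "insufficient data"
    return "reliable" if mean(scores) >= threshold else "sensitive"


# Toy usage: made-up settings and similarity scores, purely for illustration.
examples = [
    ExampleImage("a", "Euler a", 7.0, 30),
    ExampleImage("b", "Euler a", 7.5, 28),
    ExampleImage("c", "Euler a", 6.5, 32),
    ExampleImage("d", "DPM++ 2M Karras", 12.0, 60),  # not comparable to the others
]
toy_scores = {frozenset("ab"): 0.82, frozenset("ac"): 0.78, frozenset("bc"): 0.75}


def toy_similarity(x: str, y: str) -> float:
    """Look up a made-up similarity score for a pair of image ids."""
    return toy_scores.get(frozenset((x, y)), 0.0)


print(assess_model(examples, toy_similarity))  # reliable
```

The heuristic has two places to tune: what counts as comparable, and how similar comparable outputs have to be. If a model only looks consistent once the tolerances are loosened, that is a sign it is sensitive or context-dependent.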

This process turned model selection from guesswork into evaluation. It reduced wasted time and helped me build a smaller, more dependable local model library.

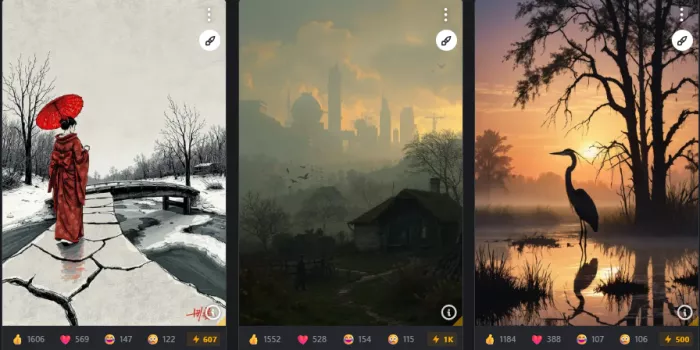

2. Image Feed as a Learning Tool

At first glance, the image feed feels overwhelming. It’s visually dense, fast-moving, and mixed in content type and quality. Without filters, it can be distracting and difficult to interpret.

Once filters are configured (particularly content tags, model type, and time range), the feed becomes something else entirely: a living dataset of real-world diffusion behavior.

Clicking into individual images reveals:

This level of visibility fundamentally changes how diffusion models are understood. Instead of reading abstract explanations, you see cause and effect directly. You start to recognize patterns: which prompts generalize, which models collapse under certain styles, and how parameter changes influence consistency.
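The generation data attached to an image is what makes this cause-and-effect reading possible. The Python sketch below parses one common layout of that data, the AUTOMATIC1111-style "parameters" text: the prompt, then an optional `Negative prompt:` line, then a final line of comma-separated `Key: value` pairs. I am assuming that layout here. Not every image on Civitai carries it, and the site's own display may format it differently. `parse_infotext` is a hypothetical helper name, and the regular expression is a simplified approach, not an official parser.

```python
import re

# One "Key: value" pair; a value may be double-quoted so it can contain commas.
PARAM_RE = re.compile(r'\s*(\w[\w \-/]+):\s*("(?:\\.|[^\\"])+"|[^,]*)(?:,|$)')


def parse_infotext(text: str) -> dict[str, str]:
    """Split AUTOMATIC1111-style generation text into prompt, negative prompt, and settings."""
    lines = text.strip().split("\n")
    settings: dict[str, str] = {}

    # Treat the last line as the settings line only if it holds several key/value pairs.
    pairs = PARAM_RE.findall(lines[-1])
    if len(pairs) >= 3:
        for key, value in pairs:
            value = value.strip()
            if len(value) >= 2 and value[0] == value[-1] == '"':
                value = value[1:-1]  # drop surrounding quotes
            settings[key.strip()] = value
        lines = lines[:-1]

    # Everything before "Negative prompt:" is the prompt; everything after is the negative prompt.
    prompt, negative, in_negative = [], [], False
    for line in lines:
        if line.startswith("Negative prompt:"):
            in_negative = True
            line = line[len("Negative prompt:"):].strip()
        (negative if in_negative else prompt).append(line)

    return {"prompt": "\n".join(prompt).strip(),
            "negative_prompt": "\n".join(negative).strip(),
            **settings}


sample = (
    "a castle on a hill, oil painting\n"
    "Negative prompt: blurry, lowres\n"
    "Steps: 30, Sampler: DPM++ 2M Karras, CFG scale: 7, Seed: 1234, Size: 512x768"
)
info = parse_infotext(sample)
print(info["Sampler"], info["CFG scale"], info["negative_prompt"])
```

Once the settings are in a dictionary, the same fields can be compared across many images. That comparison is where the patterns described above start to become visible.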

Over time, this shifted my learning from theoretical tutorials to applied observation. I wasn’t memorizing rules; I was internalizing behavior.

3. On-Site Generation: Useful, but Secondary

I approached Civitai’s on-site generation cautiously, and my usage remained intentional rather than habitual.

Most of the time, I used it to answer very specific questions:

For these purposes, the system was effective. It removed environmental variables and allowed for quick validation. However, constraints were noticeable. Queue times fluctuated, generation limits required planning, and the Buzz system introduced an abstract cost layer that discouraged casual experimentation.

This reinforced the sense that on-site generation is meant for verification and exploration, not sustained creative work. Draft Mode stood out as a practical feature here, allowing faster, lower-cost outputs that were “good enough” for decision-making.

In practice, I treated Civitai’s generator as a diagnostic tool rather than a creative workspace.

What became clear after extended use is that these elements (model research, image analysis, and limited generation) are not separate features but parts of a single workflow loop:

Civitai doesn’t explicitly guide you through this loop, but once recognized, it becomes the platform’s underlying logic.

When I first encountered Buzz on Civitai, it felt unintuitive. There was no immediate mental mapping between Buzz and real-world effort or output. Generating an image consumed Buzz, but the cost didn’t clearly translate into time, quality, or computational value. At the start, I found myself hesitating—not because Buzz was scarce, but because its meaning wasn’t obvious.

Over time, usage clarified its role. Buzz is less a currency in the conventional sense and more a control layer. It regulates access to shared GPU resources, ensuring that no single user can dominate compute. At the same time, it introduces a soft incentive structure: contributing models, images, or engagement feeds back into your ability to experiment further.

The creator monetization aspect, converting Buzz to USD, felt secondary in practice. It exists, but it doesn’t dominate behavior. Most creators I observed seemed motivated more by visibility and feedback than by direct payout. In that sense, Buzz feels closer to a governance mechanism than an economy. It works, but it is not designed to feel friendly or intuitive. It requires acclimation, and until that happens, it creates mild cognitive friction.

The presence of NSFW content on Civitai is immediate and unmistakable. It’s not buried, and it’s not framed as an edge case. Early on, this was distracting. Even when my intent was purely technical (evaluating models or studying prompts), the surrounding content sometimes pulled attention away from that goal.

What became clear with time is that this is not accidental exposure. It reflects a deliberate stance: open distribution with user-controlled filtering, rather than centralized restriction. Filters exist, but they are opt-in and require active configuration. Until that configuration is done, the experience can feel noisy or unfocused.

From a philosophical standpoint, I understand the rationale. From a usability standpoint, it adds friction, particularly for first-time users, shared work environments, or professional research contexts. Once filters are tuned, the issue largely recedes, but that initial setup is not optional. Civitai assumes users will take responsibility for shaping their own environment.

Community interaction on Civitai is uneven, but when it works, it works well.

Some model pages contain genuinely useful discussion:

Other pages, by contrast, offer little beyond surface-level praise or silence. This inconsistency means you can’t rely on community input uniformly; you have to evaluate it case by case.

What stood out to me, though, is that when feedback is present, it is almost always grounded in actual outputs. Unlike abstract AI forums, comments here are anchored to images, parameters, and reproducible results. That makes even sparse discussion more valuable than lengthy speculation elsewhere.

Several frustrations became persistent over extended use.

Performance issues were the most noticeable. During peak traffic, page loads slowed, and generation queues lengthened. While understandable at Civitai’s scale, it disrupted workflow rhythm.

Interface clutter also grew over time. As features expanded, navigation became denser. Tools accumulated faster than they were organized, making some common actions feel heavier than necessary.

Another recurring issue was the absence of structured learning paths. Beginners are left to fend for themselves, and intermediate users (those who understand the basics and want to go deeper) also lack guidance. Progress depends heavily on self-directed exploration.

Finally, the platform relies strongly on community explanations rather than official documentation. This works when the community is active, but it creates gaps when it isn’t.

None of these issues broke the experience, but they consistently reinforced the idea that Civitai optimizes for capability over comfort.

Despite the friction, I kept returning, and not out of habit.

The first reason was transparency. Very few platforms allow you to see exactly how results are produced. Nothing is hidden behind opaque abstractions.

The second was reproducibility. Being able to inspect prompts, parameters, and model stacks turns AI generation from guesswork into something closer to engineering.

The third was breadth. There is simply no comparable place where this many community-trained models coexist, documented through real usage rather than marketing descriptions.

Eventually, it became clear that if my goal was to understand diffusion models, not just generate images, avoiding Civitai would actually slow me down.

Rating: 4.3 / 5

Not because the experience is smooth or welcoming, but because it is structurally honest.

Civitai does not pretend to be simple. It exposes complexity rather than masking it. That choice will push some users away, especially those seeking instant results. For users willing to engage deeply, however, the payoff is substantial.

For me, the value of transparency, reproducibility, and scale consistently outweighed the discomfort of friction. It’s not a platform I would recommend casually, but it’s one I continue to rely on.

After spending a meaningful amount of time on Civitai, my sense is that it serves a very specific type of user, and it does so intentionally. The platform doesn’t try to broaden its appeal by simplifying itself, which makes its audience boundaries relatively clear.

Well suited for:

Stable Diffusion users

If you already run Stable Diffusion locally, or at least understand how it works, Civitai fits naturally into your workflow. It assumes familiarity with base models, extensions, and parameter tuning. In that context, it feels less like a new tool and more like a shared reference environment.

AI artists who care about consistency

For users trying to maintain a repeatable style, character identity, or visual language, Civitai is unusually useful. The ability to study how others achieve consistency, through LoRAs, prompt structure, and parameter control, makes it easier to move beyond one-off results.
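One way to act on what those pages teach is to write the levers down explicitly. The Python sketch below is my own illustration, not a Civitai feature. A hypothetical `StyleRecipe` freezes the LoRA stack, the shared style tags, the sampler, the CFG scale, the steps, and the seed, so that only the subject changes between generations. The `<lora:name:weight>` tag syntax assumes an AUTOMATIC1111-style front end, and the LoRA name and all values are placeholders.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class StyleRecipe:
    """Hypothetical bundle of the settings held fixed to keep a style repeatable."""
    loras: tuple[tuple[str, float], ...]  # (LoRA name, weight) pairs
    style_tags: str                       # shared prompt suffix
    negative_prompt: str
    sampler: str
    cfg_scale: float
    steps: int
    seed: int

    def prompt_for(self, subject: str) -> str:
        """Combine a varying subject with the fixed style tags and LoRA tags."""
        lora_tags = " ".join(f"<lora:{name}:{weight}>" for name, weight in self.loras)
        return f"{subject}, {self.style_tags} {lora_tags}".strip()


recipe = StyleRecipe(
    loras=(("my_character_v2", 0.8),),  # placeholder LoRA name and weight
    style_tags="watercolor, soft lighting",
    negative_prompt="blurry, lowres",
    sampler="DPM++ 2M Karras",
    cfg_scale=7.0,
    steps=30,
    seed=1234,
)

# Only the subject changes; every other setting stays identical.
prompts = [recipe.prompt_for(s) for s in ("standing in a forest", "reading in a library")]

# Derive a variant that differs from the original recipe in exactly one field.
higher_cfg = replace(recipe, cfg_scale=9.0)

for p in prompts:
    print(p)
```

Because the dataclass is frozen, changing a setting means deriving a new recipe with `dataclasses.replace`. That keeps each experiment to one variable at a time.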

Researchers and advanced hobbyists

Anyone interested in why diffusion behaves the way it does will find value here. The platform exposes failure cases, edge conditions, and model quirks in a way that sanitized tools never do. For learning through observation, it’s hard to replace.

Developers experimenting with model training

For those training or fine-tuning models, Civitai functions as both a distribution channel and a feedback mechanism. Seeing how real users interact with a model, and where it breaks, provides insight that private testing environments often miss.

Less suited for:

Beginners looking for instant results

Civitai does not protect new users from complexity. There is no abstraction layer that delivers “good enough” results automatically. Without prior knowledge, it’s easy to feel lost or discouraged.

Users expecting guided workflows

If you prefer step-by-step paths, presets, or recommendations, Civitai will feel unhelpful. Progress here is self-directed. The platform provides tools and data, not direction.

Those uncomfortable with mixed or adult content environments

Even with filters, the platform’s openness requires tolerance for mixed contexts. Users who need tightly controlled or curated environments may find this uncomfortable or unsuitable.

Spending extended time on Civitai changed how I think about generative AI.

Before, diffusion models felt opaque. I would adjust prompts, tweak parameters, and hope for better outputs, often without understanding why something worked. Civitai disrupted that pattern. By exposing prompts, parameters, and model stacks openly, it turned generation into something I could inspect, analyze, and reason about.

That shift mattered more than I expected.

Civitai is not enjoyable in a casual sense. It does not reward quick browsing or effortless creation. It demands attention, patience, and a willingness to engage with complexity. At times, it feels cluttered, noisy, or inefficient. There were moments where the friction was real enough to make me step away.

But it is also one of the few places where generative AI feels grounded rather than abstract, where results are tied to visible inputs, and experimentation leaves a trace others can learn from.

That combination is rare.

Civitai isn’t where I go to be impressed. It’s where I go to understand. And over time, that distinction is what made it a permanent part of my workflow rather than a tool I tested and moved on from.
