The store always looked busy.

Every time I passed by, there were people coming in and out. Cars stopped briefly, deliveries arrived, lights stayed on late. It had all the visible signs of success. The kind of place no one questions.

Then Placer.ai entered the discussion—not loudly, not as a presentation slide, but quietly, over time. And without ever declaring the store a failure, it slowly changed how people talked about it.

That was the moment I realized how Placer.ai actually works in the real world.

Placer.ai rarely appears at the beginning of a decision.

It shows up when something feels off but no one can explain why. Sales look fine. The location seems active. Yet confidence starts to wobble.

Instead of making bold claims, Placer.ai starts answering smaller questions:

None of these questions sound dramatic. But together, they start shifting the tone of conversations.

When I first used Placer.ai, I did what most people do. I opened the visit trend chart.

The numbers were solid. Flat, but stable. That felt reassuring.

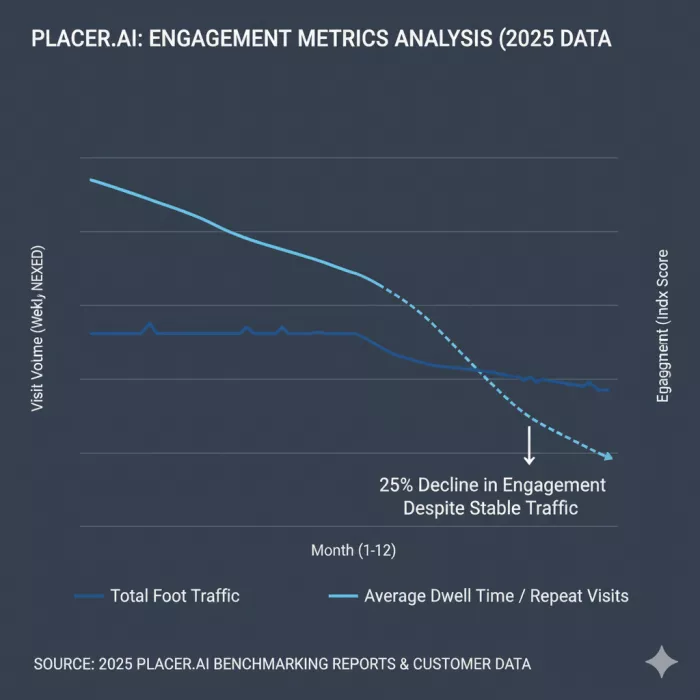

It took time to realize that Placer.ai isn’t built to reward surface-level checks. The real story sits behind the obvious charts, in the relationships between metrics.

A location can look healthy in isolation and fragile in context.

Placer.ai doesn’t highlight that difference for you—you have to notice it.

Over time, the word busy started losing meaning for me.

Placer.ai kept showing patterns that contradicted what the street view suggested:

That’s when I understood something important:

Placer.ai doesn’t measure popularity. It measures behavior.

And those two things drift apart more often than we like to admit.

One of the most surprising parts of using Placer.ai is how often it creates disagreement instead of clarity.

I watched two analysts look at the same Placer.ai dashboard.

One focused on stable traffic and seasonal recovery.

The other focused on shrinking trade areas and reduced dwell time.

Neither was wrong.

They were just answering different questions.

Placer.ai doesn’t resolve debates. It exposes assumptions.

Placer.ai almost never “wins” an argument outright.

Instead, it introduces doubt.

Someone says, “This location is doing fine.”

Placer.ai responds indirectly: “Compared to what?”

That small shift changes everything.

Suddenly:

Decisions don’t flip overnight. They soften, adjust, and drift in new directions.

One of the easiest mistakes to make in Placer.ai is assuming movement equals interest.

It doesn’t.

People pass through places for reasons that have nothing to do with preference. Commutes, errands, shortcuts, routines—all of these inflate traffic without creating value.

Placer.ai is clear about this if you slow down:

Ignoring those signals leads to confident but fragile conclusions.

There were moments when Placer.ai told a story no one wanted to hear.

Traffic looked stable, but:

Nothing looked broken yet. But something had changed.

Placer.ai didn’t accuse.

It documented.

That made it harder to ignore.

What struck me most was how rarely Placer.ai was referenced directly.

No one said, “According to Placer.ai…”

Instead, outcomes changed quietly:

The tool didn’t dictate outcomes.

It altered confidence.

Placer.ai isn’t comforting.

It doesn’t simplify complexity into a single score or verdict. It presents multiple signals that sometimes point in different directions.

For teams comfortable with uncertainty, that’s powerful.

For teams chasing quick validation, it’s frustrating.

The tool assumes you’re willing to think.

Placer.ai doesn’t explain why people behave the way they do.

It won’t tell you:

What it does is narrow the search space.

It tells you where to look before things break.

After spending enough time with Placer.ai, I stopped trusting:

And started paying attention to:

That shift didn’t feel dramatic.

It felt inevitable.

Placer.ai isn’t the kind of tool that announces conclusions.

It works in the background, quietly questioning assumptions, waiting for someone to notice that what looks successful doesn’t always behave like it.

Once you’ve seen that gap a few times, it becomes very hard to go back to guessing.

And that, more than any feature, is how Placer.ai actually changes decisions.
